Posted on 20 Jun 2024

By Jen Riley, chief impact officer, SmartyGrants

I recently attended a half-day series of talks titled "Impact evaluation: assessing the effectiveness of Australian public policy," presented by the Australian Centre for Evaluation (ACE).

Charities Minister Andrew Leigh set the tone for the event by reflecting on the idea that some truths are thought to be so self-evident that they transcend the need for evidence. He gave the example of a policy of threatening parents with the loss of income support if their children did not attend school. This policy passed the pub test, but when it was evaluated, it did not produce the expected results. This was Leigh’s way of highlighting the importance of rigorous impact evaluations to verify the effectiveness of policy interventions.

As I sat through the other presentations during the four-hour session, I kept thinking about the implications for grantmakers. If the government’s preferred approach involves randomised controlled trials (RCTs) and quasi-experimental designs, what does this mean for grantmakers?

Several key messages emerged from the showcase.

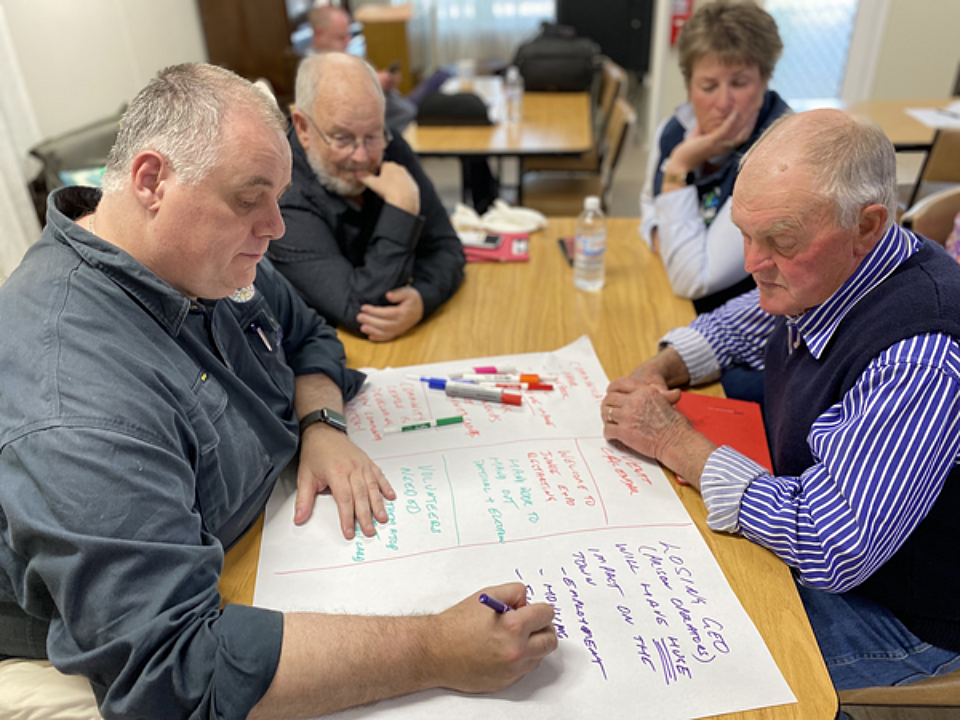

Use evidence to inform your programs, but keep in mind that local context is crucial. Simply adopting an approach without considering local conditions can be problematic. Best practice involves monitoring and evaluating the effectiveness of interventions to ensure they are not causing harm and are achieving the expected results.

More tips on using evidence:

Base your grant criteria on evidence: When selecting initiatives to fund, check that they are grounded in sound evidence.

Evaluations are critical for several reasons: they verify whether interventions produced the intended benefits, check that no harm was done, maintain accountability, inform future funding decisions, and contribute to the broader evidence base of what works.

However, post-grant evaluations often occur too late to be useful. The director of the Centre for Community Child Health at the Royal Children’s Hospital in Melbourne, Professor Sharon Goldfeld, highlighted the difference between lead indicators and lag indicators. Lead indicators predict future outcomes (e.g. the number of people wearing safety gear), while lag indicators reflect past events (e.g. the number of workplace accidents).

Choose indicators with a strong evidence base that can serve as proxies for long-term success. Mid-term evaluations are also useful for making course corrections during the grant period.

Grant managers can support rigorous evaluations, such as randomised controlled trials, by designing grant programs to facilitate them.

This approach requires a shift in mindset, funding for data collection, and changes in processes. However, it provides valuable insights into what works and what doesn’t. As Professor Goldfeld emphasised, it is important not to penalise programs that didn’t work or that returned null results. Instead, funders should keep working with grantees to refine and improve interventions over time.
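For readers who want to see what "designing a grant program to facilitate a randomised controlled trial" could look like in practice, here is a minimal Python sketch. It is an illustration under assumptions, not a method presented at the showcase: it assumes a round in which only half of the eligible applicants can be supported, and the applicant IDs and the function names `assign_groups` and `estimated_effect` are hypothetical.

```python
import random
from statistics import mean


def assign_groups(applicant_ids, seed):
    """Randomly split eligible applicants into a funded and a comparison group."""
    rng = random.Random(seed)          # a recorded seed makes the draw reproducible for audit
    shuffled = sorted(applicant_ids)   # sort first so the input order cannot affect the draw
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"funded": shuffled[:half], "comparison": shuffled[half:]}


def estimated_effect(outcomes, groups):
    """Difference in mean outcome between the funded and comparison groups."""
    funded = mean(outcomes[a] for a in groups["funded"])
    comparison = mean(outcomes[a] for a in groups["comparison"])
    return funded - comparison


# Hypothetical applicant IDs, for illustration only
applicants = [f"org-{n:02d}" for n in range(1, 9)]
groups = assign_groups(applicants, seed=2024)
print(groups["funded"])
print(groups["comparison"])
```

Once outcome data has been collected for both groups, `estimated_effect` gives a simple difference in means. A real evaluation would also need an adequate sample size, a pre-agreed outcome measure, and ethical review, none of which this sketch covers.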

By adopting these practices, grant managers can enhance the effectiveness of their programs and contribute to a stronger evidence base, ultimately leading to better outcomes for communities.
